Recently, people have shown that large-scale pre-training from diverse internet-scale data is the key

to building a generalist model, as witnessed in the natural language processing (NLP) area.

To build an embodied generalist agent, we, as well as many other researchers, hypothesize that such

foundation prior is also an indispensable component. However, it is unclear what is the proper concrete

form we should represent those embodied foundation priors and how those priors should be used in the

downstream task.

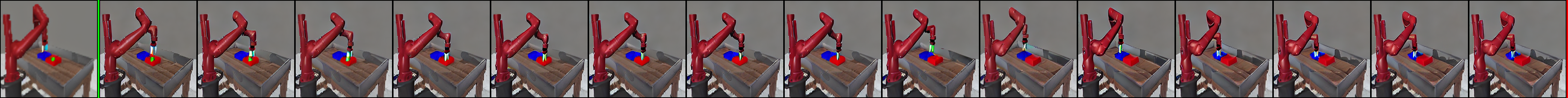

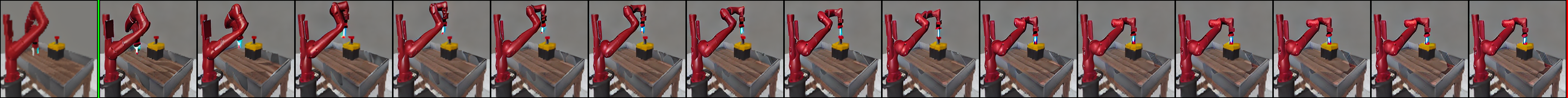

In this paper, we propose an intuitive and effective set of embodied priors that consist of foundation

policy, foundation value, and foundation success reward. The proposed priors are based on the

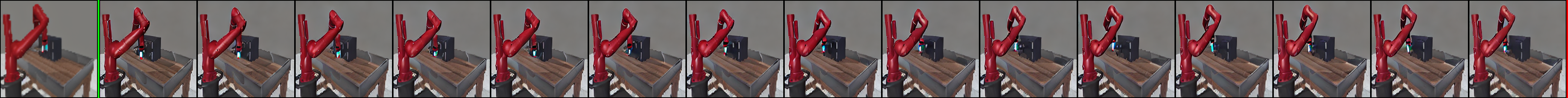

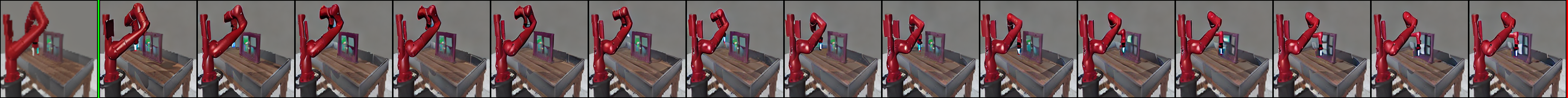

goal-conditioned Markov decision process formulation of the task. To verify the effectiveness of the proposed

priors, we instantiate an actor-critic method with the assistance of the priors, called Foundation

Actor-Critic (FAC). We name our framework as Foundation Reinforcement Learning (FRL), since our framework

completely relies on embodied foundation priors to explore, learn and reinforce.

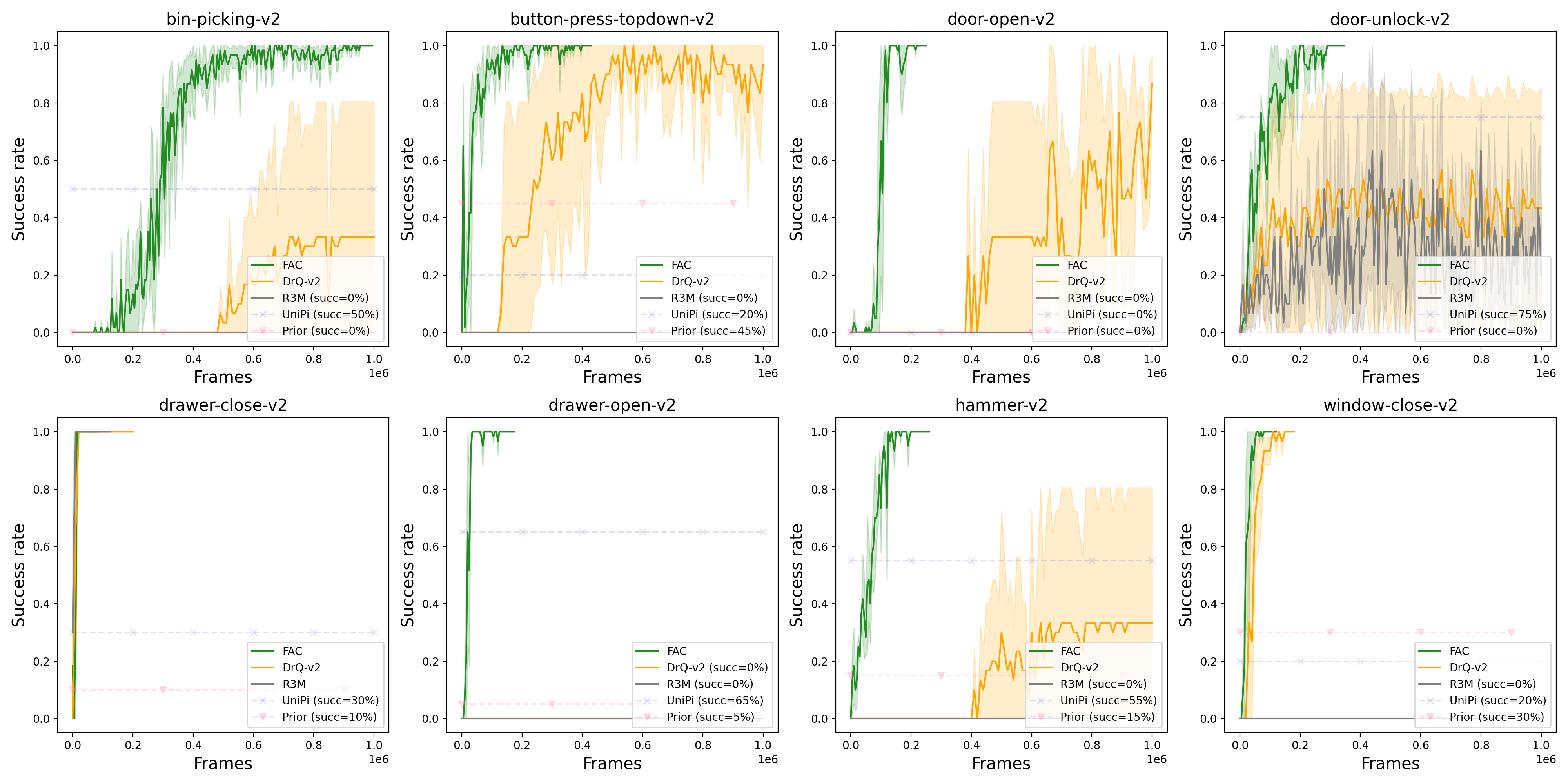

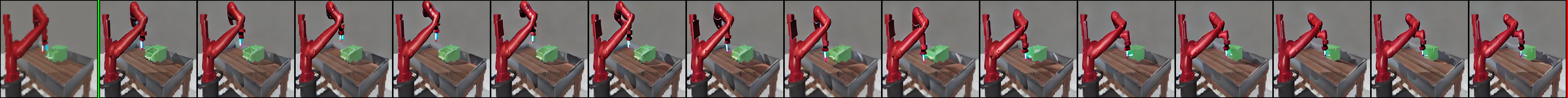

The benefits of our framework are threefold. (1) Sample efficient learning. With the foundation prior,

FAC learns significantly faster than traditional RL. Our evaluation on the Meta-World has proved that FAC

can achieve 100% success rates for 7/8 tasks under less than 200k frames, which outperforms the baseline

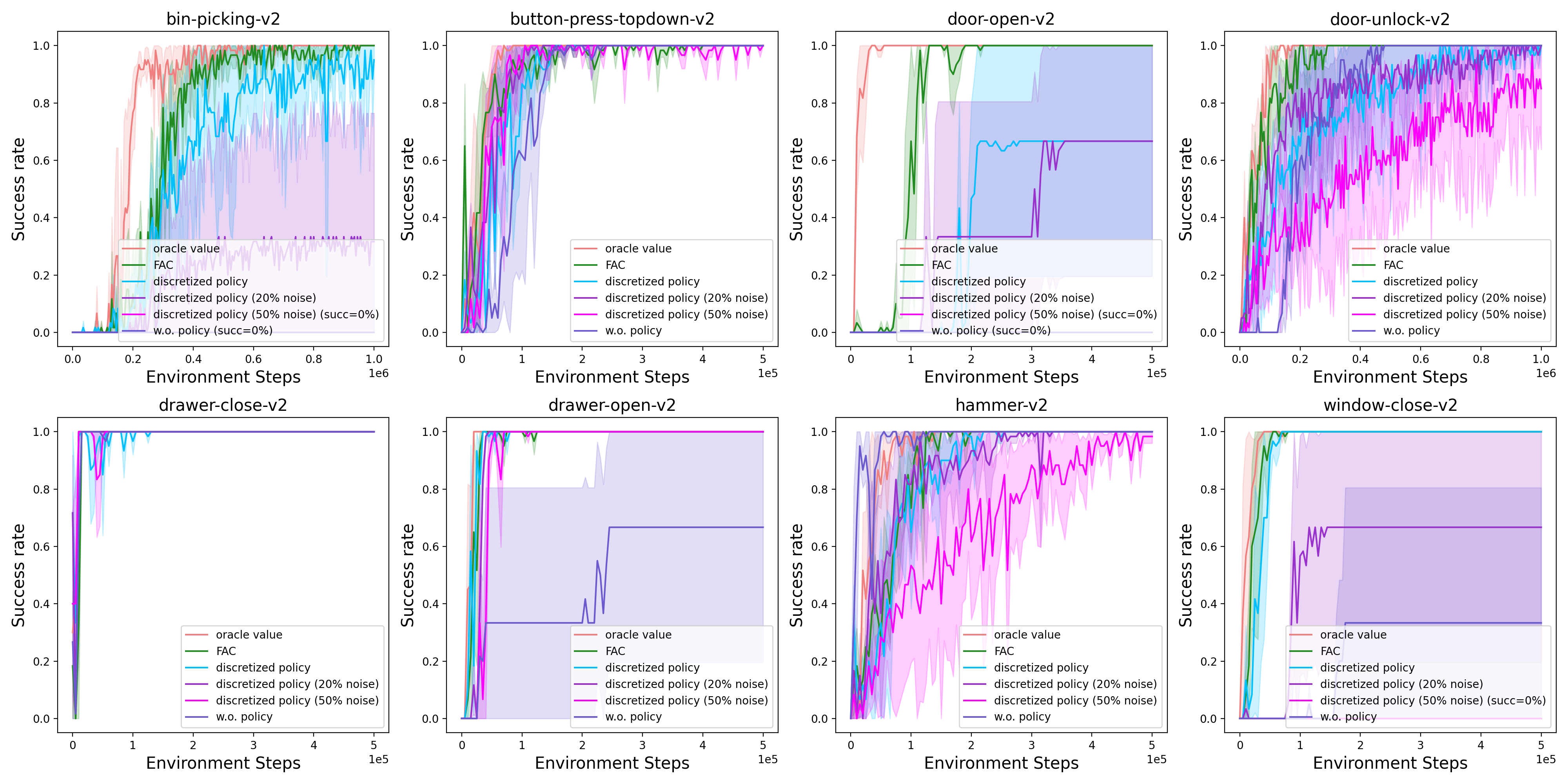

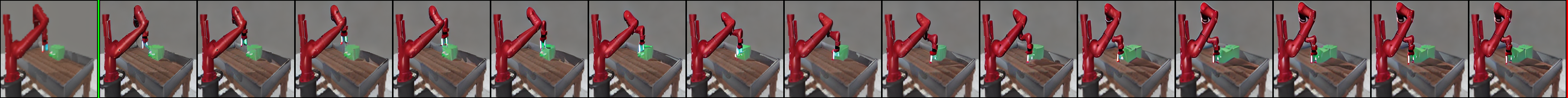

method with careful manual-designed rewards under 1M frames. (2) Robust to noisy priors. Our method

tolerates the unavoidable noise in embodied foundation models. We have shown that FAC works well even under

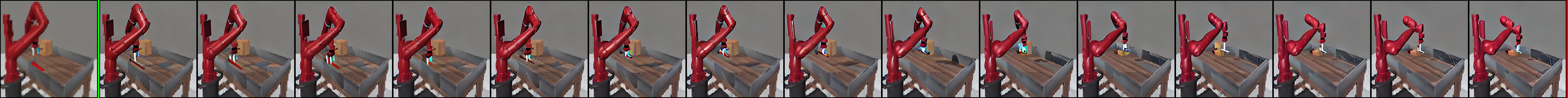

heavy noise or quantization errors. (3) Minimal human intervention: FAC completely learns from the

foundation priors, without the need of human-specified dense reward, or providing teleoperated

demonstrations. Thus, FAC can be easily scaled up. We believe our FRL framework could enable the future

robot to autonomously explore and learn without human intervention in the physical world. In summary, our

proposed FRL framework is a novel and powerful learning paradigm, towards achieving an embodied generalist

agent.